The human brain is capable of many wonders, and human progress is a living testament to that fact. Humans also used their intelligence to create computers, which handle some tasks better than people do. For example, a computer can calculate the square root of 0.000016 or fetch a web page almost instantly at your request. If you want to learn about artificial neural networks (ANNs), you first need to understand the analogy between computers and the human brain.

While computers can process complex calculations almost instantaneously, the human brain outperforms computers in imagination, common sense, and creativity. Artificial neural networks (ANNs) take their inspiration from the structure of the human brain. Their objective is to help machines reason more like humans.

As you are reading this article, your brain is processing information to understand whatever you read. The brain works with numerous nerve cells or neurons working in coordination with each other. Neurons receive sensory inputs from the external world and process the inputs to provide the outputs, which could serve as inputs for the next neuron.

You might be wondering why a tutorial on artificial neural networks describes the workings of the human brain. Since artificial neural networks are modeled on the neurons in the human brain, it is important to draw analogies between the two. Let us learn more about artificial neural networks, how they work, and their different applications.

What is an Artificial Neural Network (ANN)?

Human brains can interpret the context of real-world situations far more comprehensively than conventional computer programs can. Neural networks were developed to narrow this gap. Artificial neural networks are an attempt to simulate the network of neurons that form the basic building blocks of the human brain.

As a result, computers can learn from examples and make decisions in a loosely similar manner to humans. In technical terms, artificial neural networks are computational models designed according to the neural structure of the human brain. You can also define ANNs as algorithms that use models of brain function to interpret complicated patterns and make forecasts.

Artificial neural networks are also described as the foundation of deep learning algorithms, designed along the lines of the human brain. Just as people learn from past experience, artificial neural networks learn from historical data and offer responses as classifications or predictions. In addition, some definitions describe them as non-linear statistical models that capture complex relationships between inputs and outputs in order to discover new patterns.

A key advantage of ANNs is their flexibility in learning from example datasets. ANNs are often described as random function approximation tools, which makes them a cost-effective way to reach solutions across different use cases: given sample data, the network learns to produce the output rather than being programmed with explicit rules. ANNs can also add predictive capabilities that enhance existing data analysis techniques.

How Do Artificial Neurons Compare Against Biological Neurons?

Guides on artificial neural networks often compare artificial neurons with biological neurons, and the two are similar in both structure and function. Here are the prominent aspects on which you can compare them.

- Structure

The first point of comparison is structure. Artificial neurons are modeled after biological neurons. Biological neurons have dendrites for receiving impulses, a cell body for processing them, and an axon for transferring impulses to other neurons.

In artificial neural networks, input nodes receive the input signals and the hidden layer processes them. The output layer then applies activation functions to the results of the hidden layer to generate the final output.

- Synapses

Synapses are the links between biological neurons: they transmit impulses from the axon of one neuron to the dendrites of the next. In artificial neurons, the counterpart of synapses is the weights that connect the nodes of one layer to the nodes of the next. The weight value determines the strength of each link.

- Activation

Another crucial point of comparison is activation. In biological neurons, activation refers to the neuron firing when incoming impulses are strong enough to reach a threshold. In artificial neurons, an activation function plays this role: it maps the combined input of a node to its output.

- Learning

You can also compare artificial neural networks with the human brain in terms of learning. In biological neurons, the cell body processes incoming impulses; when they are strong enough, it generates an action potential that travels along the axon.

Learning is associated with the ability of synapses to change their strength in response to changes in activity. Artificial neural networks, on the other hand, learn through back-propagation, which adjusts the weights between nodes on the basis of the error between desired and actual outputs.
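
The comparison above can be made concrete with a minimal sketch of one artificial neuron. The function name `neuron_fires`, the threshold, and all numeric values are illustrative assumptions, not part of any library. The weights play the role of synapse strengths, and the neuron "fires" only when the weighted sum of its inputs reaches the threshold.

```python
def neuron_fires(inputs, weights, threshold):
    """Return True when the weighted sum of the inputs reaches the threshold."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return weighted_sum >= threshold


# The same incoming signals, passed through weak and strong links.
signals = [1.0, 1.0, 0.0]
weak_links = [0.2, 0.1, 0.9]    # weighted sum is about 0.3
strong_links = [0.6, 0.5, 0.9]  # weighted sum is about 1.1

print(neuron_fires(signals, weak_links, threshold=1.0))    # False
print(neuron_fires(signals, strong_links, threshold=1.0))  # True
```

Changing only the weights changes whether the neuron fires, which is why learning in an ANN comes down to adjusting weights.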

Architecture of Artificial Neural Networks

Now that you know how artificial neurons relate to biological neurons, you should learn about the architecture of ANNs. You can understand artificial neural networks by exploring what each layer in the architecture does. The architecture includes three types of layers: the input layer, the hidden layer, and the output layer.

- Input Layer

The input layer is the first layer in an artificial neural network. It receives the input information from external sources. The input data could be text, numbers, images, or audio files.

- Hidden Layer

The hidden layers sit in the middle of an artificial neural network. An ANN can have one or multiple hidden layers. A hidden layer serves as a distillation layer: it extracts the relevant patterns from the input data and passes them to the next layer for analysis.

By passing on only the most important patterns from the input, the hidden layers can make the network faster and more efficient. Most of the mathematical computation on the input data happens in these layers.

- Output Layer

The output layer produces the final result from the mathematical computations performed by the hidden layers.
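
To illustrate how data moves through the three layers, here is a minimal sketch of a forward pass in plain Python. The layer sizes (2 inputs, 3 hidden nodes, 1 output), the weight and bias values, and the helper names `layer_forward` and `forward` are all arbitrary assumptions chosen for illustration; a real network would learn its weights from data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer; each row of `weights` holds the incoming weights of one node."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_forward(inputs, hidden_w, hidden_b)
    return layer_forward(hidden, output_w, output_b)


# Arbitrary example weights: 2 inputs -> 3 hidden nodes -> 1 output node.
hidden_w = [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]]
hidden_b = [0.0, 0.1, -0.1]
output_w = [[0.7, -0.2, 0.4]]
output_b = [0.05]

prediction = forward([1.0, 0.5], hidden_w, hidden_b, output_w, output_b)
print(prediction)  # a list with one value between 0 and 1
```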

Another crucial aspect of artificial neural networks is the use of parameters and hyperparameters, both of which influence the performance of the network. Weights and biases are parameters: the network learns them during training, and its output depends directly on them. Batch size and learning rate are hyperparameters: you set them before training starts, and they govern how the learning proceeds. It is important to note that every connection between nodes in the ANN carries a weight.

Artificial neural networks use a transfer function to compute the weighted sum of a node's inputs plus the bias. Once the transfer function has calculated the sum, the activation function turns it into the node's output. Popular activation functions for ANNs include Softmax, Sigmoid, and ReLU.
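
As a rough sketch of these pieces, the following plain-Python functions implement a weighted sum with bias and the three activation functions named above. The function names and the sample values are assumptions made for illustration.

```python
import math


def weighted_sum(inputs, weights, bias):
    """Transfer function: weighted sum of the inputs plus the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def sigmoid(z):
    """Map any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)


def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


z = weighted_sum([1.0, 2.0], [0.5, -0.25], bias=0.0)  # 0.5 - 0.5 = 0.0
print(sigmoid(z))           # 0.5
print(relu(-3.0))           # 0.0
print(softmax([1.0, 1.0]))  # [0.5, 0.5]
```

Sigmoid and ReLU act on a single node's sum, while Softmax acts on the scores of a whole layer at once, which is why it is commonly used in the output layer of classifiers.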

What is Back-propagation in Artificial Neural Networks?

ANNs generate the final output value by using activation functions. Training an artificial neural network also involves error functions, which calculate the difference between the desired and the actual output. Back-propagation is the process of passing that error backward through the network and adjusting the weights to reduce it.

The training process of artificial neural networks involves providing examples of input-output mappings. For example, you can teach an ANN to recognize a dog. First, you show it thousands of images, each labeled by a human as containing a dog or not. After training on these images, you check whether the ANN can identify dogs in new images by having it classify whether a specific image includes a dog. The output of the ANN is then compared against the human-provided label for that image.

When the ANN gives an incorrect response, back-propagation adjusts the weights of the network. The process fine-tunes the weights of the connections between ANN units according to the error. It is repeated iteratively until the ANN recognizes images with a dog in them with acceptable accuracy.
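
The following is a minimal sketch of back-propagation on a tiny network with two inputs, two hidden nodes, and one output, written in plain Python. Everything specific here is an assumption made for illustration: the toy dataset (the logical OR of two inputs) stands in for labeled images, and the starting weights, the squared-error function, the learning rate of 0.5, and the 2000 passes over the data are arbitrary choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, net):
    """Return the hidden activations and the output for one input vector."""
    hidden = [
        sigmoid(sum(xi * w for xi, w in zip(x, row)) + b)
        for row, b in zip(net["w_hidden"], net["b_hidden"])
    ]
    output = sigmoid(
        sum(h * w for h, w in zip(hidden, net["w_output"])) + net["b_output"]
    )
    return hidden, output


def train_step(x, target, net, learning_rate):
    """One back-propagation update for a single labeled example."""
    hidden, output = forward(x, net)
    # Error signal at the output node (squared error passed through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Pass the error signal back to each hidden node through its outgoing weight.
    delta_hidden = [
        delta_out * w * h * (1.0 - h) for w, h in zip(net["w_output"], hidden)
    ]
    # Nudge every weight and bias against its share of the error.
    net["w_output"] = [
        w - learning_rate * delta_out * h for w, h in zip(net["w_output"], hidden)
    ]
    net["b_output"] -= learning_rate * delta_out
    for j, dh in enumerate(delta_hidden):
        net["w_hidden"][j] = [
            w - learning_rate * dh * xi for w, xi in zip(net["w_hidden"][j], x)
        ]
        net["b_hidden"][j] -= learning_rate * dh


def total_error(data, net):
    """Sum of squared errors over the whole dataset."""
    return sum(0.5 * (forward(x, net)[1] - t) ** 2 for x, t in data)


# Toy labeled examples: (inputs, desired output).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
net = {
    "w_hidden": [[0.1, 0.2], [-0.1, 0.3]],
    "b_hidden": [0.0, 0.0],
    "w_output": [0.2, -0.3],
    "b_output": 0.0,
}

error_before = total_error(data, net)
for _ in range(2000):
    for x, target in data:
        train_step(x, target, net, learning_rate=0.5)
error_after = total_error(data, net)

print(error_before, error_after)  # the error should shrink after training
```

Production frameworks compute these gradients automatically; the sketch spells them out by hand only to show where the error flows.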

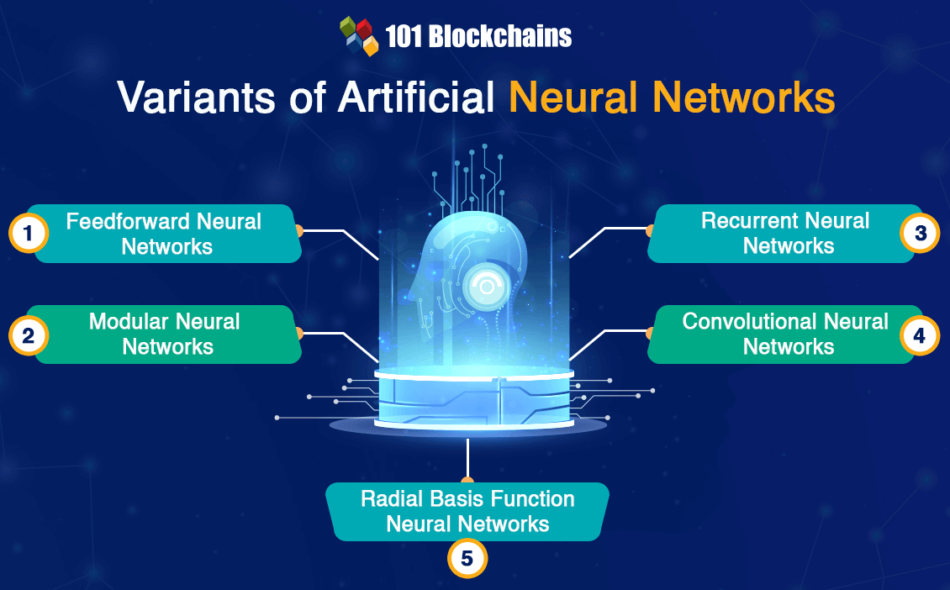

Variants of Artificial Neural Networks

Another crucial topic in any guide to ANNs is the different types of artificial neural networks. Variants include feedforward neural networks, modular neural networks, recurrent neural networks, convolutional neural networks, and radial basis function neural networks. Here is an overview of the distinct highlights of each type.

- Feedforward Neural Networks

Feedforward neural networks are the most fundamental variant of artificial neural networks. In this type of ANN, the input data travels in a single direction, from the input layer through any hidden layers to the output layer, with no loops that feed outputs back. Feedforward networks may or may not have hidden layers; those with hidden layers are usually trained with back-propagation.

- Modular Neural Networks

Modular neural networks are collections of multiple neural networks that work independently toward the output. Each network performs a unique sub-task with its own inputs. The advantage of modular neural networks is that they reduce complexity by breaking down large, complex computational processes.

- Recurrent Neural Networks

Recurrent neural networks are another common variant of ANNs. They save the output of a layer and feed it back in as input at the next step, so earlier inputs can influence later predictions. This makes them well suited to sequential data.

- Convolutional Neural Networks

Convolutional neural networks share some similarities with feedforward neural networks. However, convolutional neural networks have one or more convolutional layers that apply a convolution operation to the input before passing the result on. Convolutional neural networks have promising applications in speech and image processing.

- Radial Basis Function Neural Networks

Radial basis function networks are another prominent variant of artificial neural networks. A radial basis function produces an output that depends on the distance of a point from a center. These networks feature two layers with distinct roles: one applies radial basis functions to the input, and the other combines the results into the output. They are used to model data that represents underlying functions or trends.
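
As a small illustration of the last variant, the sketch below uses a Gaussian as the radial basis function, which is one common choice and an assumption here, since the text does not name a specific function. The names `gaussian_rbf` and `rbf_network`, the `width` parameter, and the sample values are hypothetical.

```python
import math


def gaussian_rbf(point, center, width=1.0):
    """Response that is 1.0 at the center and decays as the point moves away."""
    squared_distance = sum((p - c) ** 2 for p, c in zip(point, center))
    return math.exp(-squared_distance / (2.0 * width ** 2))


def rbf_network(point, centers, output_weights, width=1.0):
    """Layer 1 measures closeness to each center; layer 2 takes a weighted sum."""
    hidden = [gaussian_rbf(point, center, width) for center in centers]
    return sum(h * w for h, w in zip(hidden, output_weights))


print(gaussian_rbf([1.0, 2.0], [1.0, 2.0]))  # 1.0, the point sits on the center
print(gaussian_rbf([0.0, 0.0], [3.0, 4.0]))  # close to 0, the point is far away
print(rbf_network([0.0], centers=[[0.0], [5.0]], output_weights=[2.0, -1.0]))
```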

What are the Prominent Examples of Artificial Neural Networks?

The unique properties of artificial neural networks make them one of the most trusted choices for different use cases. Here are some of the notable use cases of artificial neural networks.

- Recognizing handwritten characters.

- Speech recognition.

- Signature classification.

- Facial recognition.

These applications mean that you can implement artificial neural networks in different sectors, such as healthcare, social media marketing, and sales.

Conclusion

This introduction to artificial neural networks explained their importance in simulating human-like intelligence and reasoning in machines. From the definition of artificial neural networks to their applications, you learned how ANNs could revolutionize machine learning. Artificial neural networks work through three types of layers in their architecture: the input layer, the hidden layer, and the output layer.

In addition, you saw how back-propagation improves the accuracy of the outputs of ANNs. As the world begins embracing artificial intelligence for everyday activities, it is important to learn about artificial neural networks and how they work. Explore the best training resources to familiarize yourself with the fundamentals of artificial neural networks and understand their importance for the future of AI.

The post An Introduction to Artificial Neural Networks (ANNs) appeared first on 101 Blockchains.